Overview

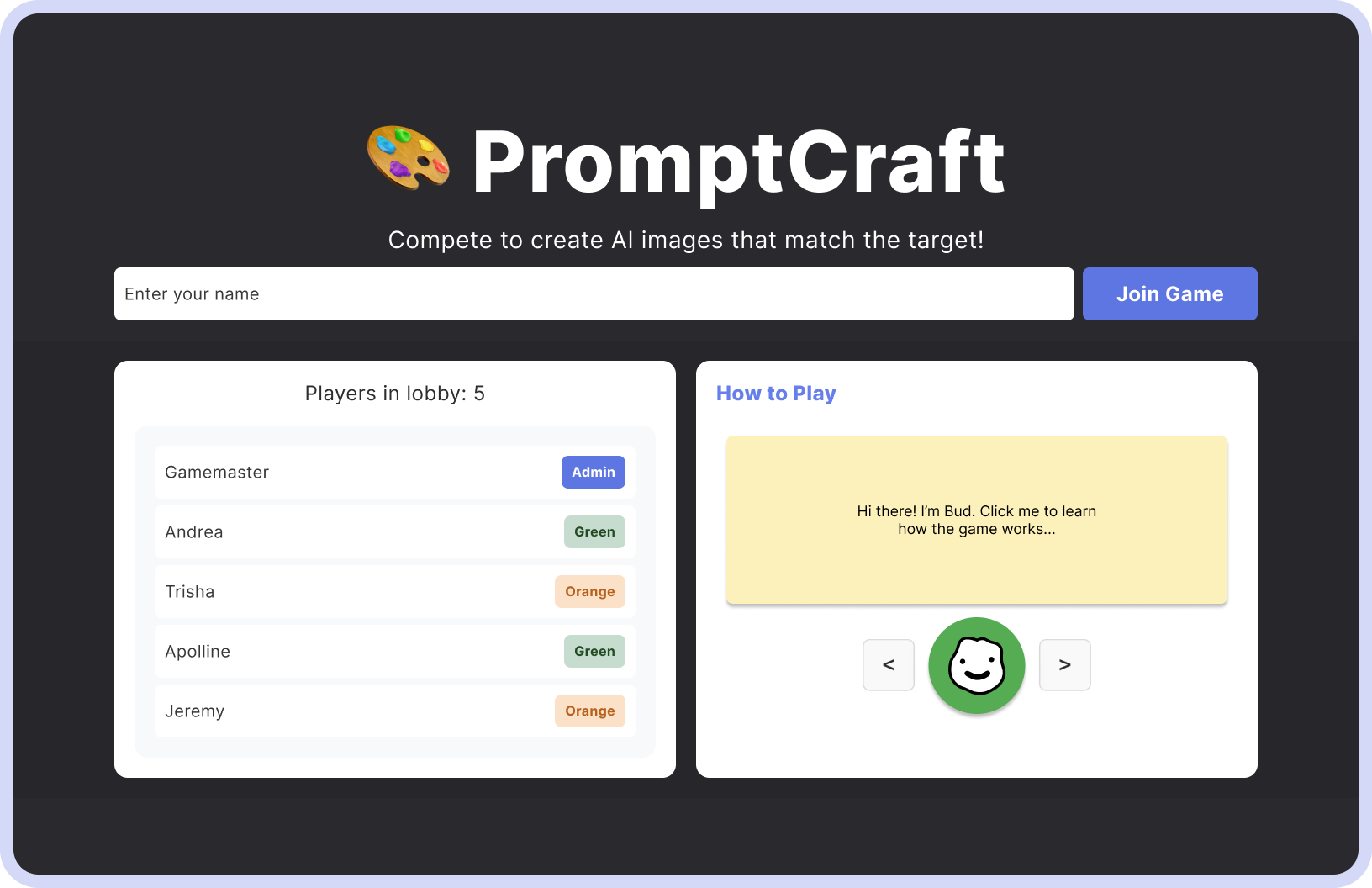

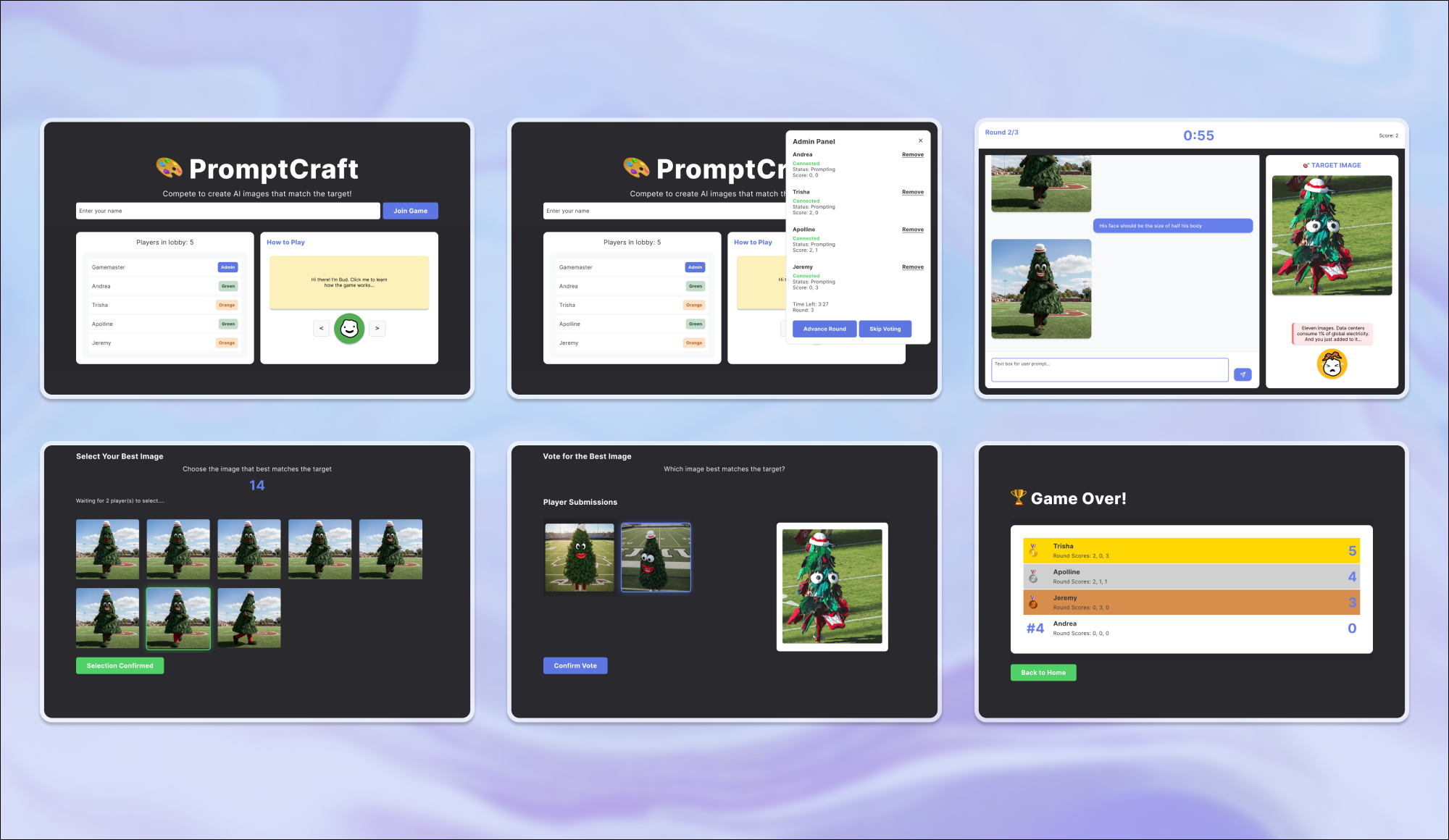

As the product manager on a cross-functional team of two engineers and a UX researcher, I led the design and delivery of 🎨 PromptCraft, a gamified AI image-generation experience that explored whether surfacing AI's environmental costs could shape user behavior.

While effects were modest, users deliberated longer and submitted fewer prompts, highlighting how experience design and behavioral nudges influence interaction patterns.

Problem Framing & Goals

AI image generation carries high environmental costs that compound at scale, yet users lack feedback loops linking their actions to those impacts. Drawing on secondary research on both the magnitude of aggregate AI energy use and the effectiveness of guilt in encouraging pro-environmental behavior, we set out to explore whether experience design could surface these costs in a way that meaningfully shaped user behavior.

When exposed to environmental feedback, we hypothesized users would:

↓ Reduce prompt volume (North Star Metric)

↑ Increase time to first prompt, signaling increased deliberation

↑ Increase prompt length as a proxy for front-loaded effort and optimization
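To show how these three hypotheses could be turned into per-player numbers, here is a minimal sketch. It assumes a hypothetical per-prompt log (`PromptEvent`, with a timestamp measured from the start of each round) and measures prompt length in words; neither the log schema nor the word-count choice comes from the actual PromptCraft build.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class PromptEvent:
    # Hypothetical log record: one row per submitted prompt.
    player_id: str
    round_num: int
    seconds_into_round: float  # time elapsed since the round started
    text: str


def round_metrics(events, player_id, round_num):
    """Compute the three hypothesis metrics for one player in one round."""
    mine = sorted(
        (e for e in events if e.player_id == player_id and e.round_num == round_num),
        key=lambda e: e.seconds_into_round,
    )
    if not mine:
        # A player who never prompted has no timing or length data.
        return {"prompt_count": 0, "time_to_first_prompt": None, "mean_prompt_length": None}
    return {
        # North Star: expected to drop under environmental feedback.
        "prompt_count": len(mine),
        # Deliberation proxy: expected to rise.
        "time_to_first_prompt": mine[0].seconds_into_round,
        # Effort proxy (word count is an assumption): expected to rise.
        "mean_prompt_length": mean(len(e.text.split()) for e in mine),
    }
```

Comparing each player's round 1 metrics (the shared baseline) against later rounds would then separate individual prompting habits from the effect of the feedback itself.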

Beyond the core research question, I defined two other product goals for the team:

- Vibe code a complete experience from 0 to 1 and analyze real user behavior to mirror industry product development.

- Make the experiment fun by framing it as a game, keeping participants motivated and reducing drop-off.

Building the Solution

Developing Treatment: Meet Bud and Spud!

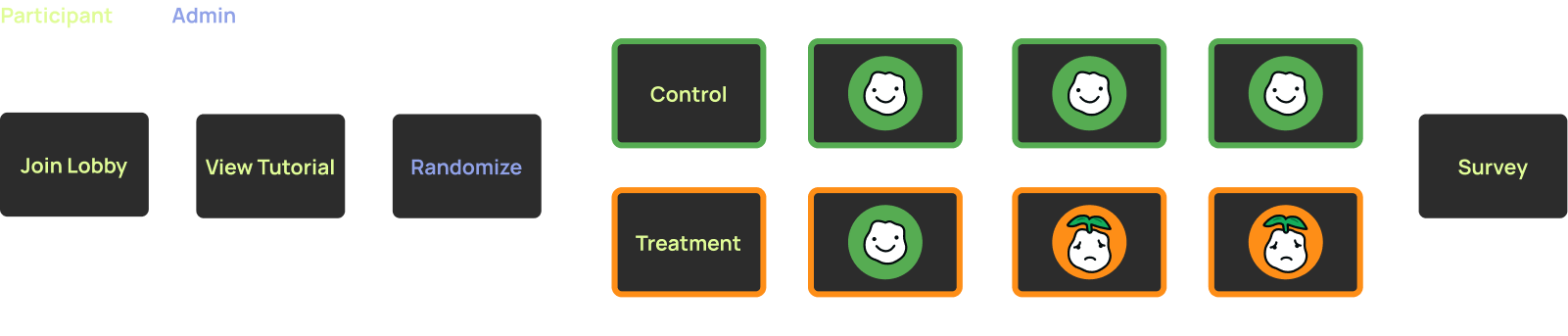

To surface environmental costs, I designed Bud and Spud, two characters who appeared after each prompt: Bud delivered neutral control messages, while Spud delivered environmental feedback.

Spud’s guilt-inducing pleas escalated with continued prompting, shifting from light humor to visible distress. In contrast, Bud remained neutral throughout as the control experience.

Designing Gameplay and the User Journey

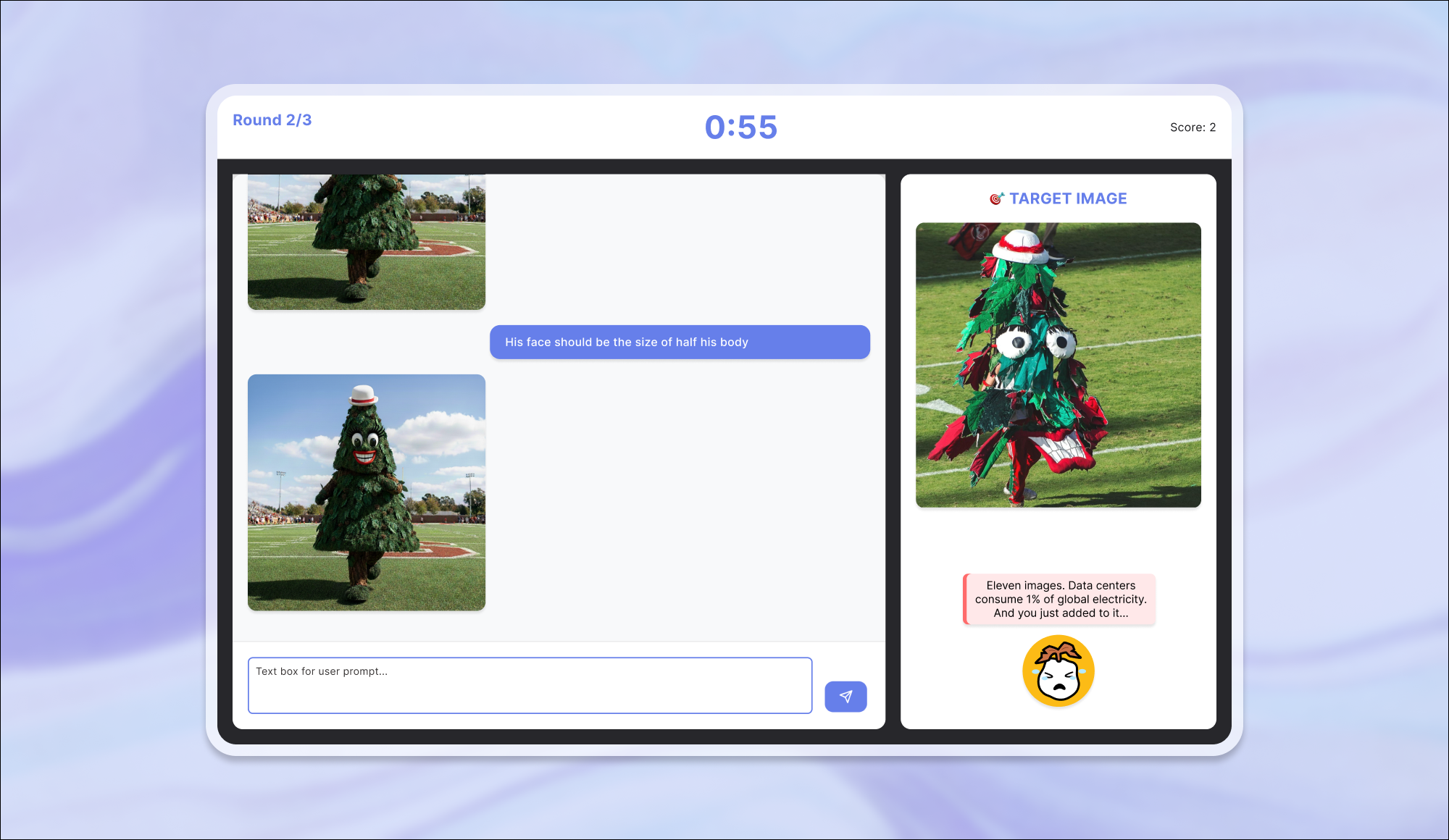

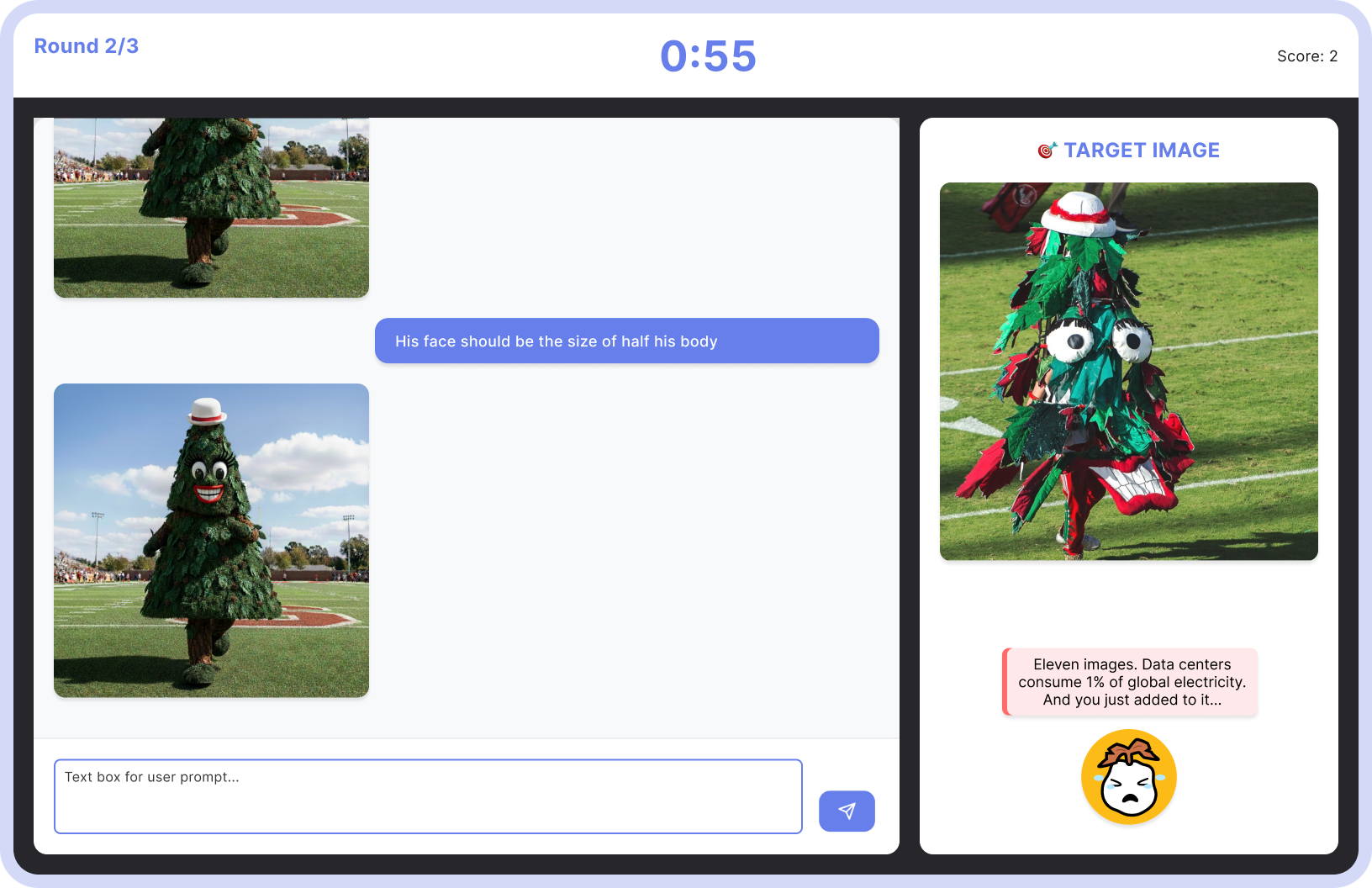

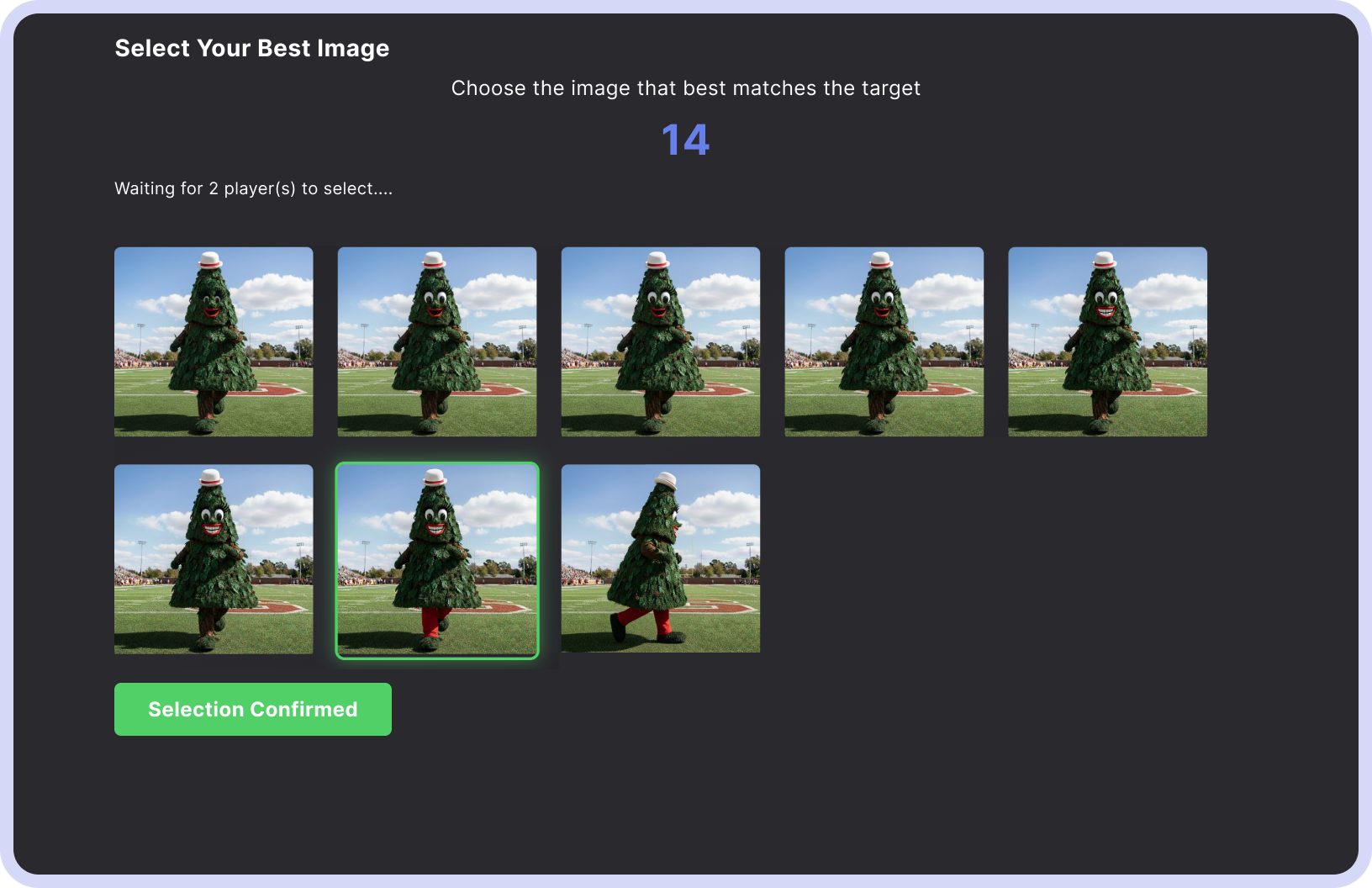

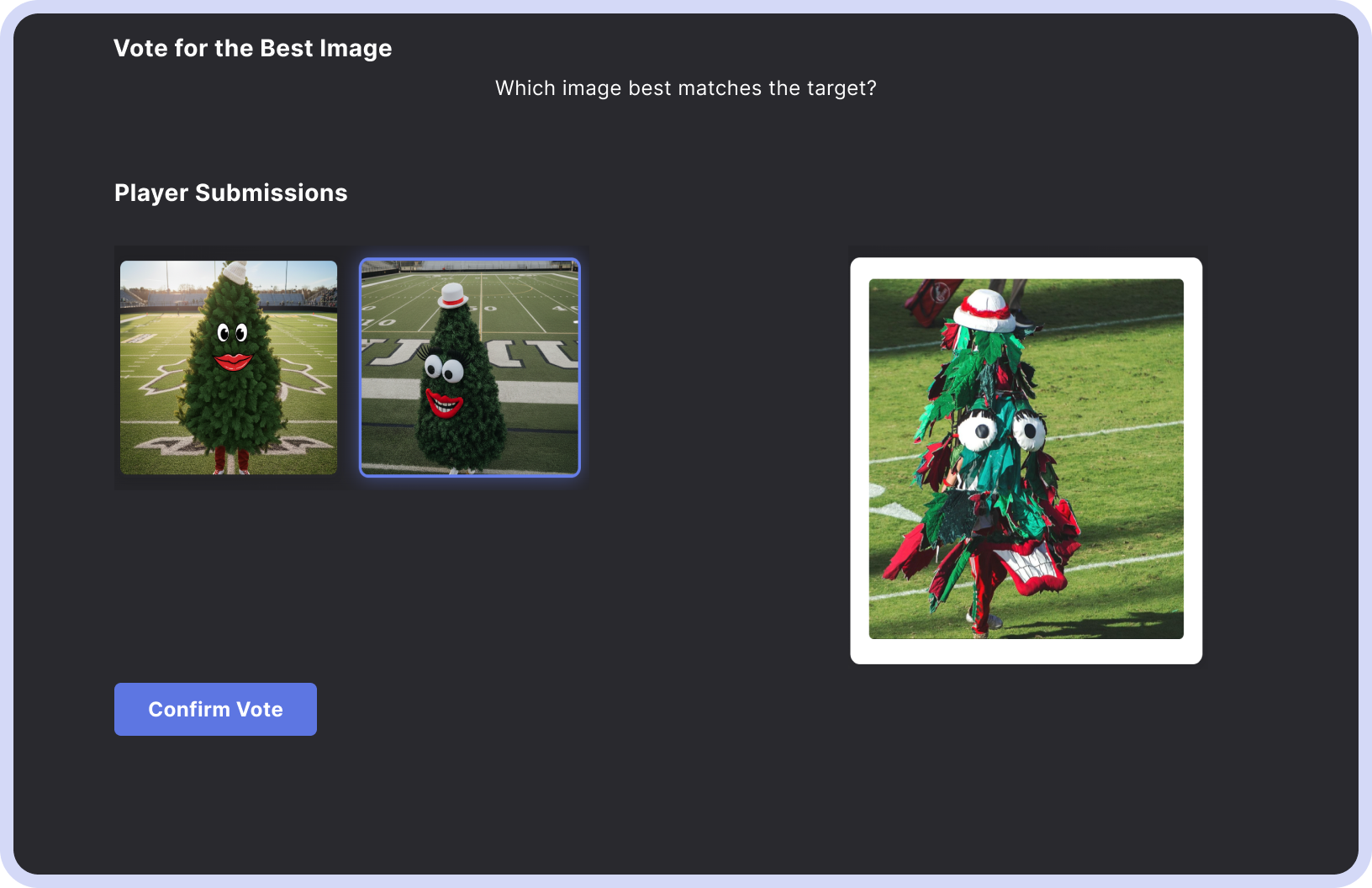

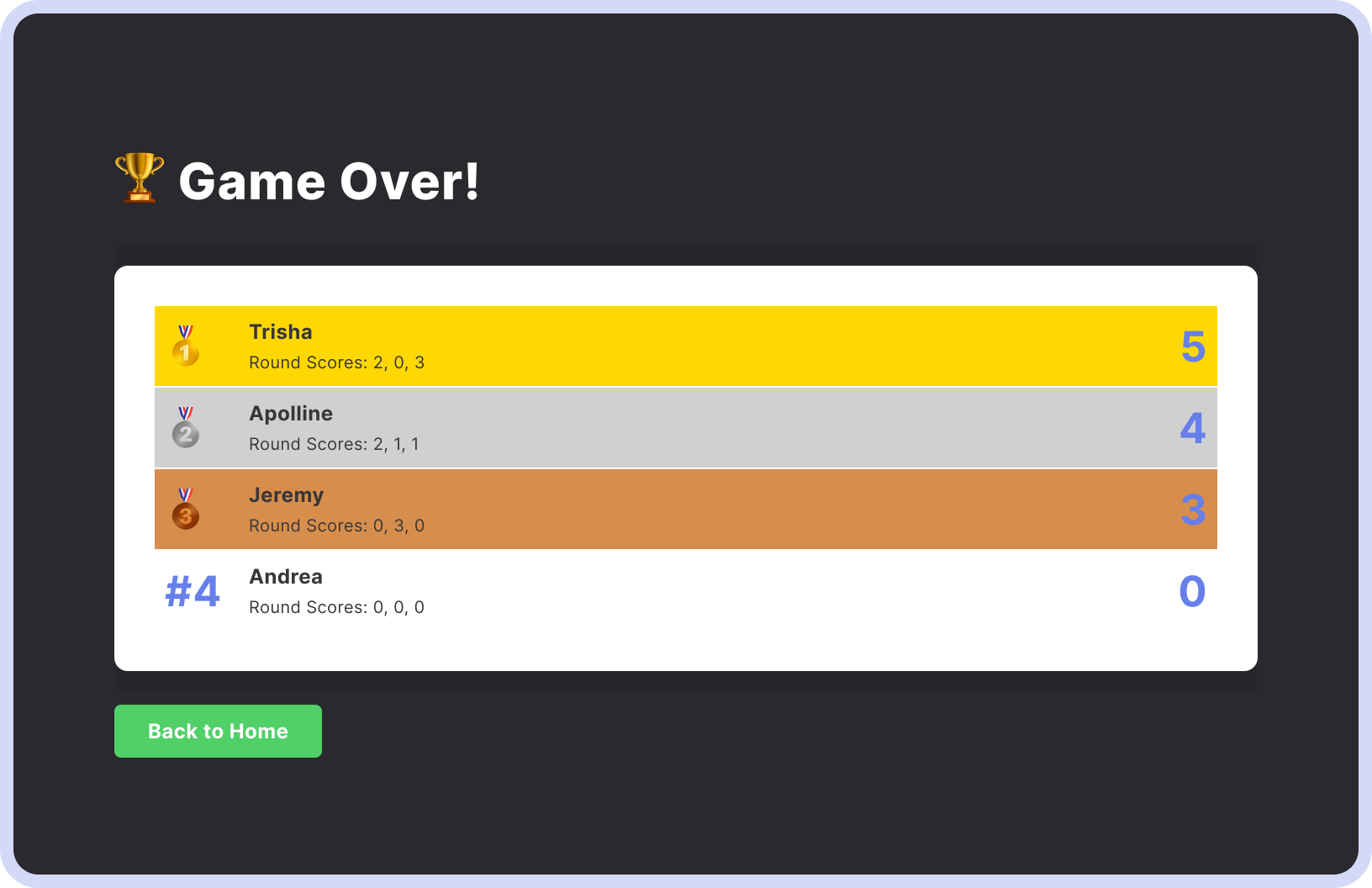

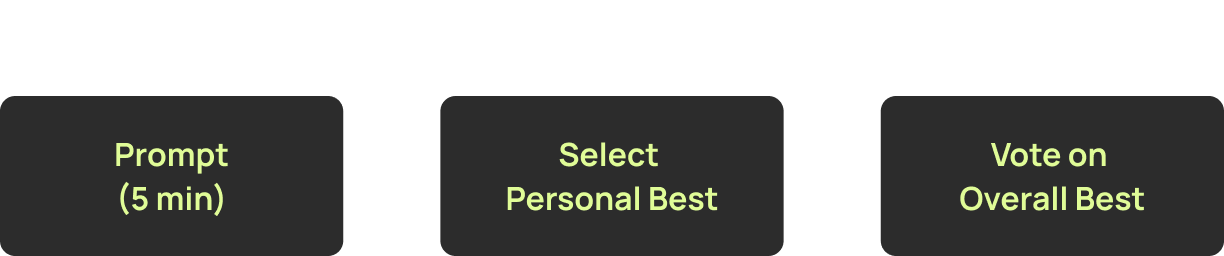

I developed the end-to-end user journey to meet both experimental and product requirements while keeping the experience accessible and engaging. To gamify it, players compete for peer votes: in each round, participants generate images, select their personal best, and then vote on everyone's submitted creations.

To address skill-based variance, I scoped a short onboarding tutorial so that players could participate regardless of prior AI familiarity. While players viewed the tutorial, the Admin randomly assigned each of them to the control or treatment group.

Both groups encountered Bud (Control) in the first round to establish a baseline for each player's natural prompting propensity. Each round was limited to five minutes, a constraint informed by earlier testing in which longer sessions led to disengagement. After three rounds, all participants completed a post-game survey, developed by our UX researcher, to capture qualitative insights.
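The assignment and round structure can be sketched as follows. This is a minimal illustration under stated assumptions: a seeded shuffle with an alternating split stands in for however the Admin actually randomized players, and showing Spud to the treatment group in rounds 2 and 3 is inferred from the round 1 baseline design. `assign_groups` and `character_for` are hypothetical names.

```python
import random

ROUNDS = 3  # three rounds per session, as described above


def assign_groups(player_ids, seed=None):
    """Randomly split players into control and treatment.

    Assumption: shuffle, then alternate, so group sizes differ by at most one.
    """
    rng = random.Random(seed)
    shuffled = list(player_ids)
    rng.shuffle(shuffled)
    return {pid: ("treatment" if i % 2 else "control") for i, pid in enumerate(shuffled)}


def character_for(group, round_num):
    """Round 1 is a shared Bud baseline; afterwards only treatment sees Spud."""
    if round_num == 1 or group == "control":
        return "Bud"
    return "Spud"


def schedule(groups):
    """Map each player to the character shown in each round."""
    return {
        pid: [character_for(group, r) for r in range(1, ROUNDS + 1)]
        for pid, group in groups.items()
    }
```

The alternating split is one design choice among several; pure per-player coin flips would also be random but could leave small sessions badly unbalanced.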

Tradeoffs and Constraints

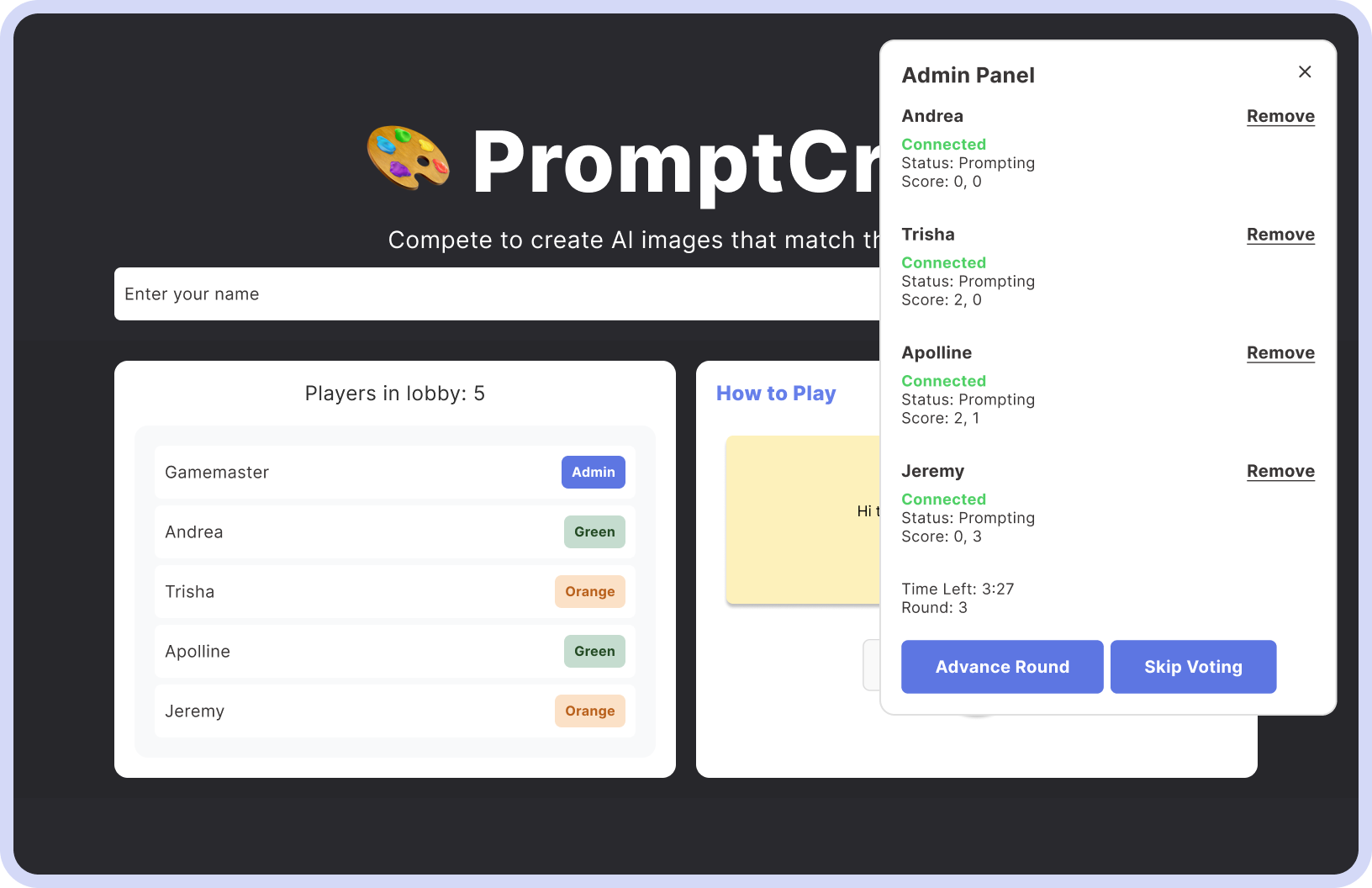

Ideally, 🎨 PromptCraft would have been a fully standalone game. However, to control API costs and accommodate the instability inherent in a rapidly vibe-coded application, we introduced an Admin "Gamemaster" role as a failsafe.

This tradeoff improved reliability and data quality. The admin could monitor player activity in real time, remove disconnected users, and advance rounds from the backend when needed. While this reduced scalability, it allowed us to ship an effective MVP experience within tight time and cost constraints.
